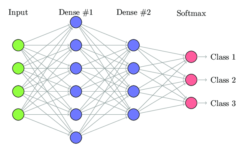

Nodes in the hidden layer receive input from the input layer. Each hidden node forms a weighted sum of its input values, using weights that are initially set to random values and are adjusted as the network learns. This weighted sum is then passed through a transfer function (also called an activation function) to produce the hidden node's output. Select Standard (the default setting) to use a logistic function as the transfer function, with output ranging between 0 and 1.

Keep the default setting of 0 for Weight Decay. To prevent over-fitting of the network on the training data, set a weight decay to penalize the weights in each iteration: each calculated weight is multiplied by (1 - decay).

Keep the default setting of 0.01 for Error Tolerance. The error in a particular iteration is backpropagated only if it is greater than the error tolerance. Error tolerance is typically a small value in the range from 0 to 1.
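As a rough illustration of these settings, the Python sketch below computes a hidden node's output with a logistic transfer function, applies weight decay, and checks an error against the tolerance. It is not XLMiner's implementation: the function names, the bias term, the initial weight range, and the use of the absolute error are all assumptions made for the example.

```python
import math
import random

def logistic(x):
    """Logistic (sigmoid) transfer function; output lies between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def hidden_node_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs, passed through the transfer function.
    The bias term is an assumption; the text only mentions the weighted sum."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return logistic(weighted_sum)

def apply_weight_decay(weights, decay=0.0):
    """Multiply each weight by (1 - decay); decay=0 leaves the weights unchanged."""
    return [w * (1.0 - decay) for w in weights]

def should_backpropagate(error, tolerance=0.01):
    """Backpropagate only when the error exceeds the tolerance.
    Comparing the absolute error is an assumption."""
    return abs(error) > tolerance

# Random initial weights; the range (-0.5, 0.5) is hypothetical.
rng = random.Random(12345)
weights = [rng.uniform(-0.5, 0.5) for _ in range(3)]
inputs = [0.2, -1.0, 0.7]
output = hidden_node_output(inputs, weights)
```

With the default Weight Decay of 0, `apply_weight_decay` returns the weights unchanged, which matches the "multiplied by (1 - decay)" description.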

Keep the default setting of 0.6 for Weight Change Momentum. In each new round of error correction, some memory of the prior correction is retained so that an outlier does not spoil accumulated learning.

Keep the default setting of 0.1 for Gradient Descent Step Size. This is the multiplying factor for the error correction during backpropagation; it is roughly equivalent to the learning rate for the neural network. A low value produces slow but steady learning; a high value produces rapid but erratic learning. Values for the step size typically range from 0.1 to 0.9.

Keep the default setting of 30 for # Epochs. An epoch is one sweep through all records in the Training Set.

If you need the results from successive runs of the algorithm to be strictly comparable, set the seed value.
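To make the roles of step size, momentum, and epochs concrete, here is a minimal Python sketch of a gradient descent update with momentum on a single weight. It uses a common textbook form of the update and a toy one-weight model; the names `momentum_step` and `train` and the toy data are hypothetical, and XLMiner's internal update rule may differ.

```python
def momentum_step(weight, gradient, prev_change, step_size=0.1, momentum=0.6):
    """One update: the new change combines the scaled error correction
    with a fraction (momentum) of the previous change."""
    change = -step_size * gradient + momentum * prev_change
    return weight + change, change

def train(weight, gradient_fn, records, epochs=30, step_size=0.1, momentum=0.6):
    """One epoch is one sweep through all records; the weight is
    updated after each record."""
    prev_change = 0.0
    for _ in range(epochs):
        for record in records:
            grad = gradient_fn(weight, record)
            weight, prev_change = momentum_step(
                weight, grad, prev_change, step_size, momentum
            )
    return weight

# Toy data following y = 2 * x; the model is y_hat = w * x.
records = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

def squared_error_gradient(w, record):
    """Gradient of 0.5 * (w * x - y) ** 2 with respect to w."""
    x, y = record
    return (w * x - y) * x

w = train(0.0, squared_error_gradient, records)
```

On this toy problem the weight settles close to 2 within the default 30 epochs; raising `step_size` makes each correction larger and the path toward the solution more erratic.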

#Neural network using excel solver generator#

If an integer value appears for Neuron Weight Initialization Seed, XLMiner uses this value to set the neuron weight random number seed. Setting the random number seed to a nonzero value (default 12345) ensures that the same sequence of random numbers is used each time the neuron weights are calculated. If left blank, the random number generator is initialized from the system clock, so the sequence of random numbers will be different in each calculation.

Keep the default setting of 1 for the # Hidden Layers option (max 4). Keep the default setting of 25 for # Nodes Per Layer. Since # Hidden Layers is set to 1, only the first text box is enabled.
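The effect of a fixed seed on weight initialization can be sketched in Python as follows. This is only an analogy using Python's `random` module, not XLMiner's generator; the function `init_weights`, the input count of 4, and the weight range are assumptions.

```python
import random

def init_weights(n_inputs, n_hidden=25, seed=12345):
    """Random initial weights for one hidden layer of n_hidden nodes.
    With seed=None, Python chooses its own seed (system entropy or time),
    so each call gives a different sequence."""
    rng = random.Random(seed)
    # The range (-0.5, 0.5) is hypothetical.
    return [
        [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
        for _ in range(n_hidden)
    ]

first_run = init_weights(4)                # seed 12345
second_run = init_weights(4)               # same seed, same weights
unseeded_run = init_weights(4, seed=None)  # differs from run to run
```

Because `first_run` and `second_run` start from identical weights, any difference between two training runs can be attributed to changed settings rather than to the random starting point.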

Normalizing the data (subtracting the mean and dividing by the standard deviation) is important to ensure that the distance measure accords equal weight to each variable. Without normalization, the variable with the largest scale would dominate the measure.
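The normalization described here (subtract the mean, divide by the standard deviation) can be sketched in Python as below. The function name and sample data are hypothetical, and the use of the sample standard deviation (n - 1 denominator) is an assumption, since the text does not say which form is used.

```python
import statistics

def normalize_columns(rows):
    """Standardize each column: (value - column mean) / column standard deviation.
    Uses the sample standard deviation; a constant column would cause a
    division by zero and is not handled in this sketch."""
    columns = list(zip(*rows))
    means = [statistics.mean(col) for col in columns]
    stdevs = [statistics.stdev(col) for col in columns]
    return [
        [(value - m) / s for value, m, s in zip(row, means, stdevs)]
        for row in rows
    ]

# Two variables on very different scales.
rows = [[1.0, 1000.0], [2.0, 2000.0], [3.0, 3000.0]]
normalized = normalize_columns(rows)
```

After normalization both columns span the same range, so neither dominates simply because of its units.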

Normalize input data is selected by default. CAT.MEDV is a discrete classification of the MEDV variable and will not be used in this example. Click Next to advance to the Step 2 of 3 dialog.

#Neural network using excel solver manual#

This example illustrates the use of the Manual Network Architecture selection. Select the Data_Partition worksheet. On the XLMiner ribbon, from the Data Mining tab, select Predict - Neural Network - Manual Network to open the Neural Network Prediction (Manual Arch.) - Step 1 of 3 dialog. At Output Variable, select MEDV, then from the Selected Variables list, select all remaining variables (except the CAT.MEDV variable).
